Lila’s Nvidia-backed raise is about more than money. It is an attempt to industrialise discovery—and to sell it as capacity to pharma, chipmakers and energy groups.

On 14 October, Lila Sciences said it had added $115mn to its Series A, bringing the round to $350mn, total capital raised to roughly $550mn and its valuation to more than $1.3bn. Nvidia’s venture arm joined the extension. Lila also disclosed a plan to commercialise its platform beyond life sciences and to build out a newly leased 235,500-square-foot site in Cambridge, Massachusetts.

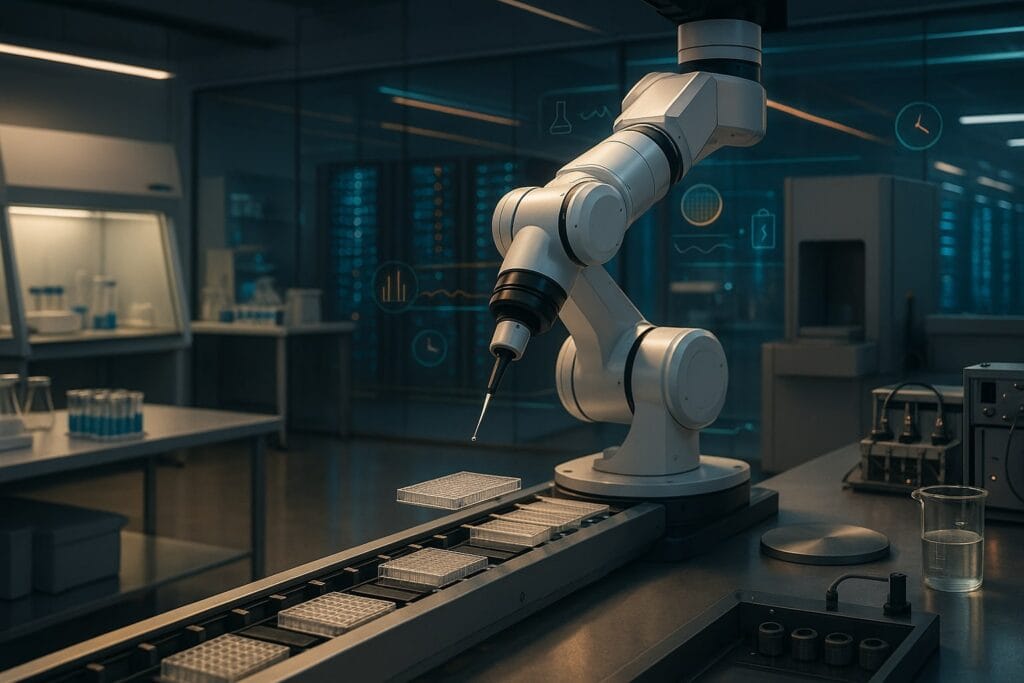

Those are eye-catching numbers. But the more interesting shift is strategic. Lila is not pitching another internet-trained model that passively consumes public data. It is building what it calls AI Science Factories: robotic laboratories orchestrated by specialised AI models that run experiments continuously, generating proprietary datasets that feed the next round of hypotheses. The company’s own note on the financing frames the mission crisply: use the money to accelerate factory build-out and bring its “scientific superintelligence” to customers working on hard problems—well beyond biotech.

Lila was founded by Flagship Pioneering and unveiled in March with a $200mn seed round, and its positioning has been consistent ever since: treat the scientific method itself as an automatable production line. That seed was earmarked explicitly to build the first factories.

A new asset class: science-as-capacity

Investors have seen versions of this movie in other industries. The cloud turned computing into metered capacity; TSMC turned chipmaking into a global foundry service. If Lila succeeds, “discovery capacity” could become an asset class in its own right: customers book throughput on a standardised stack of wet labs and models, pay for results, and accelerate their own R&D without building a factory.

Lila is explicit that non-biotech customers are part of the plan. Reuters reports the company will sell enterprise software access to firms in energy, semiconductors and drug development. That matters. A broader customer mix can smooth biotech’s boom-bust cycles, front-load revenue (software and service before drug milestones) and shorten sales cycles because materials and process industries often have faster feedback loops than clinical development.

The near-term commercial test is practical: can Lila define units of work that feel productised to a procurement team? In batteries, that could be “screen 10,000 electrolyte formulations under X safety and Y temperature constraints and deliver top-5 candidates with reproducible results.” In semiconductors, it could be “optimise a deposition recipe under predefined reliability metrics.” The more standardised the unit, the closer this comes to the kind of capacity that infrastructure-scale investors already know how to underwrite.
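To make the idea of a productised unit of work concrete, here is a minimal Python sketch of how such an order might be specified and checked before it is scheduled. Every field name, the `WorkOrder` class itself and the temperature bounds are hypothetical illustrations, not anything Lila has published.

```python
from dataclasses import dataclass


@dataclass
class WorkOrder:
    """Hypothetical spec for one productised unit of discovery work."""

    domain: str                                   # e.g. "batteries"
    candidates_to_screen: int                     # size of the design space to test
    constraints: dict[str, tuple[float, float]]   # property -> (min, max) allowed
    top_k: int                                    # ranked candidates to deliver

    def validate(self) -> None:
        """Reject orders that cannot be scheduled as standard capacity."""
        if self.candidates_to_screen <= 0:
            raise ValueError("must screen at least one candidate")
        if not 0 < self.top_k <= self.candidates_to_screen:
            raise ValueError("top_k must be between 1 and candidates_to_screen")
        for name, (low, high) in self.constraints.items():
            if low > high:
                raise ValueError(f"constraint {name!r} has min > max")

    def satisfies(self, measured: dict[str, float]) -> bool:
        """True if a measured candidate meets every constraint; missing data fails."""
        return all(
            name in measured and low <= measured[name] <= high
            for name, (low, high) in self.constraints.items()
        )


# Illustrative battery order; the bounds are placeholders, not real specs.
order = WorkOrder(
    domain="batteries",
    candidates_to_screen=10_000,
    constraints={"max_cell_temp_c": (-20.0, 60.0)},
    top_k=5,
)
order.validate()
```

The point of the sketch is that a malformed order fails before it reaches the schedule, which is what separates a sellable unit of capacity from open-ended custom work.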

What Lila is—and isn’t

The temptation is to lump Lila in with AI-in-drug-discovery outfits. It is different in emphasis. Companies such as Recursion and insitro have pushed hard on scaled datasets and compute. Recursion built BioHive-2—described as the largest supercomputer in the pharmaceutical industry—on Nvidia infrastructure to process tens of petabytes of biological and chemical data and to train larger foundation models.

Insitro, meanwhile, has shown a steady cadence of top-tier partnerships that monetise model-driven discovery. In the last day it extended a collaboration using its ChemML platform to design medicines for a novel ALS target—another data-rich, model-first programme with a large-pharma counterpart.

Lila’s bet is complementary but distinct: own the experimental data-generation loop at scale across domains, not just the model. That is why the Cambridge footprint and robotics stack are strategically important. In AI, the most durable moats are often proprietary datasets and feedback systems. If you can run the experiment, close the loop and keep the data, you can iterate faster than teams limited to public or partner-provided corpora. Reuters’ framing—emphasising proprietary scientific data produced via original experiments—captures that difference.

Why this is happening now

Three currents converged to make the “science factory” plausible:

- Industrialised lab ops. A generation of contract labs and automation vendors has standardised key pieces: liquid handling, imaging, sample logistics and environmental, health and safety (EHS) compliance. That lowers the fixed-cost shock of building a 24/7 facility.

- Foundation models for the bench. The model frontier has moved from task-specific to general-purpose representations (phenomic, structural, reaction-condition models) that can suggest experiments—not just analyse results. Recursion’s work on phenomic foundation models is one example of this maturation, albeit in a different stack.

- Capital wants tangible AI. In a crowded market for text-and-image models, investors are eager for AI with non-speculative cash flows. Factories produce metrics—utilisation, cycle time, yield—that public markets understand.

The economics that will make or break it

Factories are capital-hungry and unforgiving. Three levers will determine whether Lila’s model scales:

- Utilisation. Downtime kills returns. The goal is stable, high utilisation with mix-resilient workflows (assay types and materials that can be scheduled flexibly). Think airline ops, not artisan lab benches.

- Cycle time & re-run rate. The selling point is speed from hypothesis to validated result. If re-runs due to QC issues creep up, cycle time balloons and margin collapses.

- Standardisation vs. custom work. The more a project looks like custom consulting, the worse the gross margins. The more it looks like SKU-able capacity with well-defined interfaces (inputs, safety constraints, deliverables), the better.
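The interaction between utilisation and re-runs can be made concrete with a back-of-envelope model. The sketch below assumes, purely for illustration, that each run fails QC independently with probability r and is simply repeated, so the expected number of attempts per validated result is 1/(1 − r), the mean of a geometric distribution. The function names, station count and cycle times are hypothetical, not Lila figures.

```python
def effective_cycle_time(base_hours: float, rerun_rate: float) -> float:
    """Expected hours per validated result when failed runs are repeated.

    Assumes each attempt fails QC independently with probability
    rerun_rate, so attempts per success are geometric with mean 1 / (1 - r).
    """
    if not 0 <= rerun_rate < 1:
        raise ValueError("rerun_rate must be in [0, 1)")
    return base_hours / (1 - rerun_rate)


def validated_results_per_week(stations: int, utilisation: float,
                               base_hours: float, rerun_rate: float) -> float:
    """Weekly throughput of a hypothetical factory of identical robotic stations."""
    available_hours = stations * 24 * 7 * utilisation
    return available_hours / effective_cycle_time(base_hours, rerun_rate)


if __name__ == "__main__":
    # Illustrative scenarios: healthy, QC-troubled, and QC-troubled plus downtime.
    for util, rerun in [(0.8, 0.0), (0.8, 0.25), (0.6, 0.25)]:
        rate = validated_results_per_week(10, util, 4.0, rerun)
        print(f"utilisation={util:.0%} rerun={rerun:.0%} -> {rate:.0f} results/week")
```

Under these made-up inputs, ten stations at 80% utilisation with a four-hour cycle deliver 336 validated results a week; a 25% re-run rate cuts that to 252, and slipping to 60% utilisation on top takes it to 189. Fixed costs stay the same in all three cases, which is why the levers compound on margins.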

Lila’s choice to sell software access alongside factory output is notable: software can both prime demand and discipline it. Enterprise users can explore design spaces virtually, reserve only high-probability runs and then commit scarce factory slots to the most informative experiments. The expansion plans and cross-industry sales ambitions reported this week suggest the company understands that interlock.
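The software-to-factory interlock amounts to a triage rule. The sketch below is one simple way to express it, assuming each in-silico design carries a model-predicted success probability and an uncertainty score. The `Design` class, the 0.5 threshold and the use of uncertainty as a stand-in for how informative a run would be are all illustrative assumptions, not Lila's method.

```python
from dataclasses import dataclass


@dataclass
class Design:
    name: str
    p_success: float    # model-predicted probability the design meets spec
    uncertainty: float  # model's uncertainty, used here as a proxy for information gain


def allocate_slots(designs: list[Design], slots: int, min_p: float = 0.5) -> list[Design]:
    """Pick designs for scarce factory slots.

    Step 1: drop designs the model considers unlikely to succeed.
    Step 2: among the rest, prefer those the model is least sure about,
    since a physical run on them should update the model the most.
    """
    shortlist = [d for d in designs if d.p_success >= min_p]
    shortlist.sort(key=lambda d: d.uncertainty, reverse=True)
    return shortlist[:slots]


candidates = [
    Design("A", 0.9, 0.05),
    Design("B", 0.7, 0.30),
    Design("C", 0.3, 0.50),  # filtered out: below min_p
    Design("D", 0.6, 0.20),
]
print([d.name for d in allocate_slots(candidates, slots=2)])  # ['B', 'D']
```

Design A is the model's most confident pick but is left out of the two slots, because running it would mostly confirm what the model already believes.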

Competition—and complementarity

It is easy to cast this as a race between models and robots. The reality is more symbiotic. Model-heavy shops need fresh, labelled data that actually move decision boundaries. Factory-heavy shops need models to prioritise the next most valuable experiment. Expect more two-way deals: model providers reserving factory capacity during model development, and factory operators licensing or co-developing domain-specific models to sharpen their throughput.

Two recent data points support that direction of travel. First, Nvidia’s presence on Lila’s cap table aligns the factory story with the compute ecosystem that already powers biopharma AI. Second, the same 24–48-hour window brought new or expanded alliances between AI shops and big pharma: Takeda’s multi-year expansion with Nabla Bio, for example, includes upfront payments in the double-digit millions and up to $1bn in milestones. Together, these deals suggest that demand for differentiated, AI-enabled discovery capacity is broadening, not narrowing.

What success looks like in 12 months

For investors who want a checklist, these are the proof points that would show the “science factory” is more than branding:

- First non-biopharma reference account. A paying energy or semiconductor customer with measurable ROI—a better catalyst, a tighter process window, a yield or lifetime gain—would validate the cross-sector thesis. (Reuters flags these sectors explicitly as targets.)

- A published capacity metric. For example, X million experimental “decisions” per quarter with a Y% successful replication rate across labs.

- Software-to-factory conversion rate. What proportion of in-silico designs advance to physical runs—and how often do those runs retire uncertainty rather than simply confirm bias?

- Time-to-project start. From signed contract to the first experiment on a robot: weeks, not months.

- Multi-tenant scheduling without drama. Evidence that battery screens, protein engineering and materials discovery can coexist without cross-contamination or throughput hits.
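Several of these proof points reduce to simple ratios or date arithmetic that an investor could track quarter by quarter. A minimal sketch, with hypothetical function names and made-up example counts (none of these figures come from Lila):

```python
from datetime import date


def ratio(numerator: int, denominator: int) -> float:
    """Safe ratio; returns 0.0 when nothing was attempted."""
    return numerator / denominator if denominator else 0.0


def software_to_factory_conversion(in_silico_designs: int, physical_runs: int) -> float:
    """Share of in-silico designs that advanced to a physical run."""
    return ratio(physical_runs, in_silico_designs)


def replication_rate(attempts: int, reproduced: int) -> float:
    """Share of re-tests (e.g. at a second lab) that reproduced the original result."""
    return ratio(reproduced, attempts)


def days_to_project_start(contract_signed: date, first_robot_run: date) -> int:
    """Calendar days from signed contract to the first experiment on a robot."""
    return (first_robot_run - contract_signed).days


# Made-up quarter: 2,000 designs, 150 physical runs, 36 of 40 replications passed.
print(software_to_factory_conversion(2_000, 150))
print(replication_rate(40, 36))
print(days_to_project_start(date(2025, 11, 3), date(2025, 11, 24)))
```

Conversion rate alone cannot show whether runs "retire uncertainty", so in practice it would sit alongside a measure of how often physical results changed the model's ranking.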

The risk ledger

Capex & depreciation. Even with venture backing, carrying a large fixed asset base through macro cycles is hard. If capacity ramps slower than expected, depreciation eats margins.

Reliability and EHS. A factory for experiments must be boringly safe. That means robust environmental controls, waste handling, and validation baked into every step. Outages that would be a nuisance in a software-only business can become reputational risks here.

Vendor concentration. Over-reliance on single-source robotics or reagents introduces supply-chain fragility. The smarter operators will dual-source and design for substitution.

Data rights. The value of factory-generated data depends on the licence. If every customer insists on exclusivity, network effects disappear. If the operator hoards too much, customers balk. Expect creative data-sharing constructs (e.g., pooled, anonymised embeddings) that preserve advantage while keeping customers on side.

Why the timing matters

The past two years taught investors that AI without proprietary data and fast feedback is theatre. Lila is one of the first well-capitalised attempts to solve for both at once: own the data loop, sell the capacity, and point it at markets that can pay now. It will not be the only one. The rest of 2025 will likely bring copycats, partnerships and, if the non-biopharma pipeline matures, the first “science factory” revenue that looks like a real, repeatable product line.

In a week when headlines also included a $600mn obesity-drug financing and another big-pharma AI collaboration, Lila’s fundraise could have been just more noise. It isn’t. It is a marker for a broader shift: away from AI that explains science and toward AI that does science—faster, cheaper and, crucially, at industrial scale.